1 Introduction

1.1 What is Big Data?

The increasing popularity of Internet of Things (IoT) devices in the field and the continuous advancement of data-gathering techniques have significantly increased the amount of relevant information. Data volumes now commonly reach the petabyte (1 PB = 1024 terabytes) or exabyte (1 EB = 1024 petabytes) scale. Big Data is a buzzword defined by the increasing volume, variety and velocity of data (Figure 1), and a concept used to describe a volume of both structured and unstructured data so large that it is difficult to process using classic software techniques and databases.

Figure 1. Source: https://algorithmia.com/blog/wp-content/uploads/2020/03/image2.jpg

The entire Big Data processing architecture is set up so that large volumes of information can be processed and retrieved more quickly and easily than with a conventional database, which stores information fragments in different locations and merges them into a single report as needed. For many practitioners in this field, Big Data analytics is above all about access to large volumes of diverse kinds of data, which are then fed to machine learning algorithms to discover previously unidentified relationships [1].

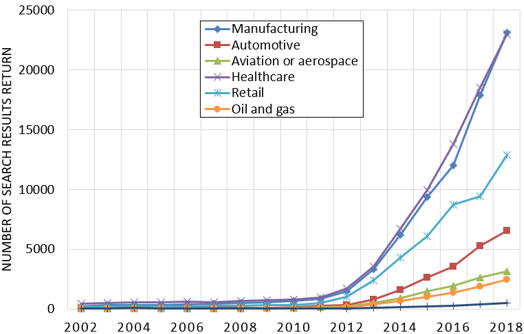

With the rapid development of technology and industry, Big Data is a popular strategy now, and it has become a link for different disciplines. Making the most out of data is a key part of successful financial performance for an energy firm. However, Big Data has traditionally been regarded by the oil and gas industry as a term used by “softer” industries to track people’s behaviours, buying or political tendencies, interests, etc. As a consequence, the promotion of Big Data technologies in oil and gas lags behind other sectors. We can suspect the main reason behind this phenomenon is that the connected services and networks associated with its deployment raise many questions surrounding areas of cybersecurity and data privacy.

Figure 2. Google search results for “Big Data” in each industry. Source: https://www.researchgate.net/figure/Google-search-results-for-big-data-in-each-industry_fig2_339808583

Big Data Analytics is becoming one of the critical chapters in the digitalisation of the oil and gas industry. It is about managing and processing an extreme volume of data to improve operational efficiency, to enhance decision making and to mitigate risks in the workplace [2]. Essentially, this process generates more revenue for the industry. But it takes some advanced hardware and software to analyse Big Data properly; these tools allow an industry to evaluate millions of bytes of data for fast conclusions. For instance, Artificial Intelligence (AI) analyses inputs to learn and upgrade its sorting processes over time, using data — generally coming from Big Data Analytics — to provide an accurate diagnosis or a decision-making framework.

1.2 Artificial Intelligence, a business imperative

AI is the development and application of computer systems capable of logic, reasoning, and decision-making. Using visual perception, experience recognition, and language translation, this self-learning technology analyses data and outputs information more efficiently than human-driven methods.

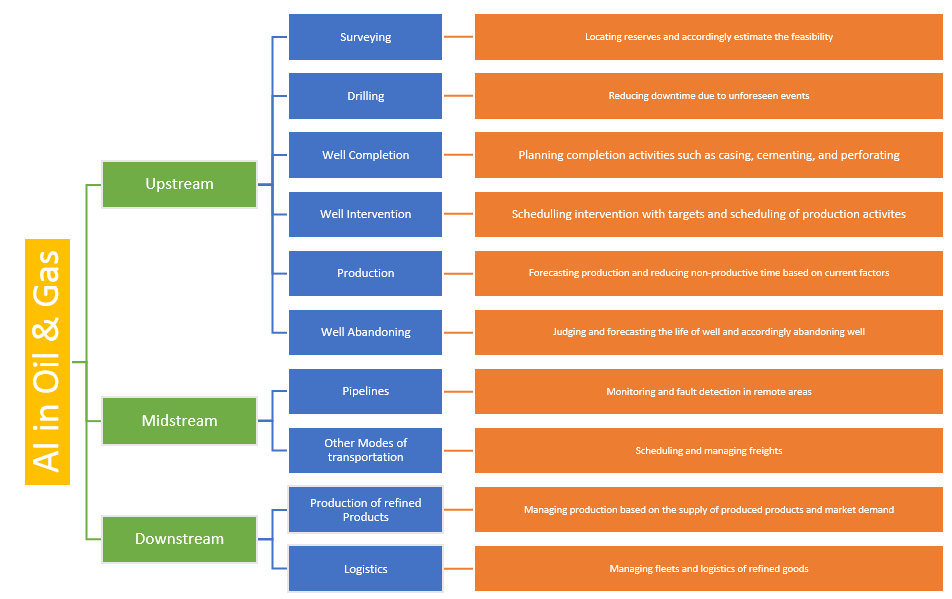

The development of artificial intelligence solutions for the oil and gas industry aims at augmenting and enhancing human knowledge and expertise in exploration and production. Exploration and production have always been technology-intensive and forward-looking, seeking new ways to improve operations and reduce exploration risks, so naturally this is where the highest demand for AI solutions has been seen. Although the technology was initially approached with curiosity and some degree of scepticism, today most companies have incorporated AI initiatives.

Figure 3. AI architecture in oil and gas. Source: https://bigdata-madesimple.com/wp-content/uploads/2019/02/image1.png

AI tools can be built quickly using either open-source tools such as Python or TensorFlow, or APIs. Of course, AI solutions require significant computing power, and thus deep learning, neuromorphic chips, and high-performance cloud architectures are progressively being adopted in oil and gas.

Artificial intelligence is a very strategic domain, and there is a consensus that technology is a crucial lever and enabler in unlocking substantial business value. It is truly becoming a business imperative in the industry. Throughout this article, we will see sets of solutions spanning across the energy value chain from exploration to health and safety.

1.3 Building solutions for the oil & gas industry

The new generation of wireless networks, the improvements of ubiquitous computing devices, and advanced storage capabilities have been opening more possibilities for real-time applications, such as remote oilfield monitoring. Also, predictions indicate that oil demand will keep rising in the next two decades, which may result in a significant gap in the petroleum supply [3]; this prospect stresses the role of innovation in enhancing the efficiency of the production chain to meet the increasing demand.

Unlike in other industries, dealing with large quantities of data is not a new issue for the oil and gas sector. Companies have generated and used a lot of data over the years for studying the surface of the earth, logging while drilling, monitoring and maintaining pipeline systems, forecasting the price trends of various energy sources, and even understanding the volatility of financial markets. Throughout the whole process, oil and gas companies have compiled a lot of data. But when it comes to new digital technologies, in particular the sophisticated use of data, the industry is beginning to realise that there are many untapped opportunities it has yet to take advantage of, and that these opportunities could lead to huge savings.

Figure 4. Big Data applications in the oil and gas industry. Source: https://ieeexplore.ieee.org/ielx7/6287639/8948470/9028154/graphical_abstract/access-gagraphic-2979678.jpg

Big Data, coupled with AI, is already delivering results, and material improvements are being witnessed in several areas. On average, prediction accuracies for either equipment failures or process outcomes have improved from 60 or 70 per cent to more than 80 per cent, and workforce productivity improvements of 20 to 30 per cent have driven efficiency gains of between 5 and 15 per cent, with corresponding cost reductions [4]. Likewise, accelerations of 70 to 90 per cent are reported in decision-making, especially in knowledge-intensive domains like Geoscience and Engineering.

When businesses start digitising the process of resource discovery and energy distribution, more and more data will be produced and gathered. Energy companies will increasingly use Big Data to derive more benefit from the upstream and downstream supply chain and to increase total productivity and revenue.

2 Big Data for Geology and Exploration

2.1 The pathway to a smarter mineral exploration

As the industry’s focus shifts from production to long-term profitability, more companies are looking to gain deeper subsurface insights from their data and to do so, they must have straightforward access to that data.

One prominent feature of the oil drilling process is the use of large and complex data in real time. Big Data allows oil & gas exploration companies to visualise their drilling environment on a computer screen by promptly analysing large amounts of data. This analysis can then be used to guide instruments such as drills in the best way possible while enhancing the production process by up to 8% [5]. This efficiency upgrade is not something that should be ignored.

As companies drill for oil, they can perform complex calculations on the massive amounts of data, including information, such as the seismic properties of the drilling area or the temperature levels. Knowing all of this information increases the effectiveness of the drilling process in terms of time and money spent.

2.2 Seismic interpretation

Seismic interpretation is critical for the success of exploration. It is a knowledge-intensive use case and very dependent on the tacit knowledge of geologists. Results of interpretation vary with the individual, their knowledge, and the context in which the analysis is performed. That makes it an almost perfect case for applying more unbiased systems, which could not only help deliver consistent performance in seismic data interpretation but also provide a log of decisions that explains the reasons behind specific interpretation outcomes. Having a system that continuously improves its ability to interpret the data under the guidance of a company's experts has been the primary motivation behind building that sort of solution.

Figure 5. Pre-stack seismic data for interpretation and analysis. Source: https://sharpreflections.com/wp-content/uploads/2015/11/force.jpg

Artificial Neural Networks (ANNs) are the machine learning technology used most in the oil and gas industry [6]. They can process vast quantities of data to extract patterns and information that cannot be detected by the human brain. A new wave of decision-supporting solutions, including self-growing and multi-input/multi-output neural networks for rock type prediction and classification, culminated in recent developments in machine learning products. They can train neural network algorithms on input as divergent as pre-stack data and lithology logs, and provide users with qualified “most probable” seismic classification volumes.

For example, Galp, a Portuguese oil and gas company, and IBM have been developing a solution that interprets pre-stack seismic data and describes geological structures and their characteristics [4]. Notably, the system performs this with awareness of the geologic context (structural geometry, depositional environment photography) and, even more importantly, it takes into account notes from previous investigations. In this way, it continues building on prior knowledge, which is probably its most important characteristic.

2.3 Reservoir analogues

Solutions relying on Big Data are being developed to accelerate prospect identification and to reduce uncertainty when analysing a new reservoir. To do so, a series of machine learning algorithms are applied to identify and recommend missing reservoir properties based on reservoir analogues and sophisticated user interfaces begin to allow advanced visual analysis. In addition to extracting numerical data from analogues, these systems are also extracting relevant geological information from internal and external reports and geological papers.

In the case of Repsol, this tool analyses over 60,000 reservoir analogues and almost half a million geological documents [4]; what is more, it allows over 80 per cent faster discovery of relevant Geoscience information. Such an improvement comes in very handy when a decision about a new lease, acquisition, or expansion needs to be made quickly. There are strong indications that those capabilities provide a noticeable competitive advantage for oil and gas companies.
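To make the idea of recommending a missing reservoir property from analogues more concrete, here is a minimal sketch that uses a k-nearest-neighbours average. It is not the method used by the tools described above, which the source does not detail; the analogue records, the property names, and the `estimate_missing` helper are all hypothetical and chosen for illustration only.

```python
import math

# Hypothetical analogue reservoirs (illustrative values, not real data).
ANALOGUES = [
    {"depth_m": 2100, "porosity": 0.21, "permeability_md": 150.0},
    {"depth_m": 2300, "porosity": 0.18, "permeability_md": 90.0},
    {"depth_m": 3500, "porosity": 0.09, "permeability_md": 5.0},
    {"depth_m": 2200, "porosity": 0.20, "permeability_md": 120.0},
]

def estimate_missing(target, analogues, missing, k=2):
    """Estimate `missing` for `target` as the mean over its k nearest analogues."""
    known = [key for key in target if key != missing]
    # Scale each known property by its range so no single unit dominates.
    spans = {}
    for key in known:
        values = [a[key] for a in analogues]
        spans[key] = (max(values) - min(values)) or 1.0

    def distance(a):
        return math.sqrt(
            sum(((a[key] - target[key]) / spans[key]) ** 2 for key in known)
        )

    nearest = sorted(analogues, key=distance)[:k]
    return sum(a[missing] for a in nearest) / len(nearest)

# A new reservoir with no permeability measurement yet (assumed values).
new_reservoir = {"depth_m": 2150, "porosity": 0.205}
print(estimate_missing(new_reservoir, ANALOGUES, "permeability_md"))
```

Range scaling is a simple design choice that keeps depth, measured in thousands of metres, from swamping porosity, measured in fractions; a production system would likely use richer similarity measures and many more properties.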

2.4 Shale sweet spots

A relatively new addition to the Big Data and AI industry toolkit is a heat map for production prospects. It has been developed specifically for unconventional shale operators, and it is used to identify economic production locations (the heat map) for acreage owned by an operator. In other words, it is a solution used to determine the optimal producing well location, potentially driving greater production output from the acreage.

Technically, this type of tool extracts key production parameters from well logs, from both conventional vertical wells and currently producing horizontal wells, and automatically conducts spatial interpolation. Those key production characteristics are used in subsequent machine learning models that predict production outcomes based on well log data and geology. Well completion specifications can be integrated, not to optimise completion parameters but to recognise the impact of different fundamental completion characteristics on the production volume from the field. These tools are also capable of analysing the yielding capacity at each production layer, thus providing a three-dimensional view of the property’s production potential.
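The spatial interpolation step can be sketched with inverse distance weighting (IDW), one common and simple technique. The source does not say which interpolation method these tools actually use, so IDW is an assumption here, as are the well coordinates, the interpolated property, and the `idw` helper name.

```python
import math

def idw(samples, x, y, power=2.0):
    """Inverse-distance-weighted estimate at (x, y) from (xi, yi, value) samples."""
    numerator = 0.0
    denominator = 0.0
    for xi, yi, value in samples:
        d = math.hypot(x - xi, y - yi)
        if d == 0.0:
            return value  # exactly on a well: use its measured value
        w = 1.0 / d ** power
        numerator += w * value
        denominator += w
    return numerator / denominator

# Hypothetical wells: (easting_km, northing_km, net pay thickness in m).
wells = [(0.0, 0.0, 10.0), (2.0, 0.0, 20.0), (0.0, 2.0, 30.0), (2.0, 2.0, 40.0)]

# Coarse heat-map grid over the acreage, one row per northing value.
grid = [
    [round(idw(wells, gx, gy), 1) for gx in (0.0, 1.0, 2.0)]
    for gy in (0.0, 1.0, 2.0)
]
for row in grid:
    print(row)
```

Each grid cell is a weighted average of the well values, with nearer wells counting more; the resulting grid is the kind of surface a heat map would colour.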

Originally, shale sweet spot finding tools were designed to support operators with the acreage they owned. But increasingly they are being applied to acquisitions, where the target acreage (adjacent or elsewhere) is assessed using its estimated production potential. Sweet spot finders can be deployed very quickly. They are pre-configured by some cloud vendors, and as long as they can either receive the operator’s well-log data or access the sources where the data is stored, the solution can be tested immediately, so users have a chance to see the results. In terms of deployment, because it is a cloud-based solution, it is much faster than the typical on-prem situation. Also, the cost is per usage rather than an initial upfront investment, which makes it quick and easy to adopt in an operating environment.

Figure 6. Finding sweet spots in shale reservoirs. Source: https://youtu.be/5usnf9twqDY

3 Big Data for Site Development

3.1 Analysing large sets of documents

Analysing fairly sizeable well-delivery documents from a large number of wells informing an operator how to prepare drilling operations is a complex and resource-intensive activity. Big Data and AI can be applied to deal with this amount of data — for example, Woodside reports over 80 per cent efficiency improvements in its operations [4].

Traditionally, each dot representing a well within a graphical user interface had a pile of information associated with it — a technical assistant would get together those documents, print them out, and there would be time for an old-fashioned reading session for the next few weeks. Now, drilling engineers and scientists can just draw a circle on a map, and the work is done: every drilling event for every well in that location is summarised by depth, with unstructured data turned into structured data for further analysis.
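The "draw a circle on a map" query can be illustrated with a small sketch that selects wells within a radius of a point and lists their drilling events by depth. This is only an illustration of the idea, not Woodside's implementation; the well records, coordinates, event labels, and the `events_in_circle` helper are hypothetical.

```python
import math

EARTH_RADIUS_KM = 6371.0

def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two (lat, lon) points in kilometres."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))

def events_in_circle(wells, centre, radius_km):
    """Return (depth_m, well, event) tuples for wells inside the circle, sorted by depth."""
    lat0, lon0 = centre
    hits = []
    for well in wells:
        if haversine_km(lat0, lon0, well["lat"], well["lon"]) <= radius_km:
            for depth_m, event in well["events"]:
                hits.append((depth_m, well["name"], event))
    return sorted(hits)

# Hypothetical wells with already-structured drilling events.
WELLS = [
    {"name": "W-1", "lat": -20.00, "lon": 115.00,
     "events": [(1200, "lost circulation"), (2500, "stuck pipe")]},
    {"name": "W-2", "lat": -20.05, "lon": 115.02,
     "events": [(1800, "kick")]},
    {"name": "W-3", "lat": -21.50, "lon": 116.00,
     "events": [(900, "washout")]},
]

for depth_m, name, event in events_in_circle(WELLS, (-20.0, 115.0), 10.0):
    print(depth_m, name, event)
```

The hard part in practice is upstream of this query: turning unstructured reports into the structured event records that the sketch takes for granted.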

Woodside, the largest Australian natural gas producer, established a data science and cognitive intelligence team in 2015. Since then, deep learning artificial intelligence platforms have been responding to queries from its staff, rapidly sifting, sorting, and surfacing decades of data and company knowledge. The company proudly claims to have processed the equivalent of over 600,000 A4 pages through the application of advanced analytics and cognitive computing in its operations. Now, in the words of Woodside’s Head of Cognitive Science, they went from spending 80 per cent of the time looking for the information and only 20 per cent of the time figuring out what to do about it, to completely flipping that equation [7], [8].

3.2 Drilling

Another classic application of machine learning tools to site development activities is drilling, where they are used to monitor the reliability of operations or processes. Predictive drilling advisors monitor near-real-time drilling sensors and can predict incidents over a much longer time horizon than current systems allow, extending it from approximately half an hour to almost two hours. The core of this business solution is its ability to save the millions lost to non-productive time resulting from stuck pipe or other incidents.
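As a very simplified stand-in for such an advisor, the sketch below flags sensor readings that deviate sharply from a trailing window, using a rolling z-score. Real predictive advisors rely on far richer models and longer horizons; the torque values, window size, threshold, and the `rolling_alerts` name are assumptions made for illustration.

```python
from collections import deque
from statistics import mean, pstdev

def rolling_alerts(readings, window=5, threshold=3.0):
    """Return indices whose reading deviates from the trailing window by more than `threshold` sigmas."""
    history = deque(maxlen=window)
    alerts = []
    for i, value in enumerate(readings):
        if len(history) == window:
            mu = mean(history)
            sigma = pstdev(history)
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                alerts.append(i)
        history.append(value)
    return alerts

# Hypothetical near-real-time torque readings with one abrupt spike.
torque = [10.1, 10.0, 9.9, 10.2, 10.0, 10.1, 9.8, 10.0, 14.5, 10.1]
print(rolling_alerts(torque))  # [8]
```

A rule like this only reacts once the anomaly is visible in the signal, which is precisely the limitation that longer-horizon predictive advisors aim to overcome.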

Weatherford has recently developed a digitalisation solution for well construction optimisation. After acquiring high-resolution data from multiple sources — geological and geophysical data, rig site, offset well databases, and even third party applications — it transfers the data over a secure network to one centralised cloud-based repository. Then, a web-based interface lets drilling experts interact with complex data sets and displays advanced 2D and 3D renderings across numerous wells. Features such as smart alerts, intelligent algorithms, and real-time engineering models support simulating downhole conditions and anticipating hazards.

3.3 Autonomous well management

Using real-time data and technical models, a mix of lift control, fluid optimisation and IoT infrastructure can achieve continuous production improvement. Since modern sensors can instantly detect anomalies and progressions throughout the artificial-lift system, a reciprocating rod-lift pumping unit can now be equipped with an onboard sensory system that is regularly updated by embedded well-engineering models [9].

Figure 7. Components for boosting production autonomously in multiple artificial lift scenarios. Source: https://www.weatherford.com/getattachment/50c1a851-dd89-4e48-83d6-9406d3220a3b/Autonomous-Well-Management-System-Increases-Uptime

The unit uses an information base collected from millions of downhole cards and oilfield simulations to determine independently what improvements can be made to optimise production in response to any fluctuations. For instance, if a formation begins releasing additional fluids into a wellbore, this influx will immediately trigger the calculations needed to identify an under-pumped well. A fully automated system can then calculate setpoint readjustments and make the most of the surge.

3.4 Optimising SAGD

In situ recovery is one of only two methods involved when it comes to extracting oil sands and bitumen. The thermal processes that are widely used and economically viable are steam-assisted gravity drainage (SAGD) and cyclic steam stimulation, whilst non-thermal recovery methods include vapour extraction and cold heavy oil production. In Canada, for example, the viability of SAGD comes from the fact that most of the deposits are too deep for mining and also too shallow for high-pressure steam injection processes. Despite the commercial success of thermal in situ processes, they present multiple challenges, including substantial water consumption, high energy intensity, considerable supply cost, and high GHG emissions [10].

Hence, oil sands greenfield operations that use the SAGD production method, with its notoriously difficult geology and limited ability to predict output from steam input, are particularly interested in an optimisation feature. It is crucial for those looking for ways to reduce operating costs and optimise the steam-to-oil ratio, which is what that feature is ultimately intended to achieve. Digitalised oilfields in this context are already seeing prediction accuracy above 99 per cent with artificial intelligence, using a hybrid model that is not typical data-driven machine learning but rather a physics-inspired hybrid. It takes thermodynamics and geology into account and renders results with an unprecedented level of precision.
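One common way to build a physics-inspired hybrid is to let a first-principles formula provide a baseline and fit a small data-driven correction to what the formula misses. The sketch below follows that pattern with a deliberately toy baseline; it is not the model referred to above, and the nominal steam-to-oil ratio, the "reservoir quality index" feature, and the production history are all hypothetical.

```python
def physics_baseline(steam_m3, nominal_sor=3.0):
    """Toy first-principles estimate: oil volume implied by a fixed steam-to-oil ratio."""
    return steam_m3 / nominal_sor

def fit_residual(xs, residuals):
    """Ordinary least squares for residual = a + b * x, returned as (a, b)."""
    n = len(xs)
    mx = sum(xs) / n
    mr = sum(residuals) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    b = sum((x - mx) * (r - mr) for x, r in zip(xs, residuals)) / sxx
    return mr - b * mx, b

# Hypothetical history: (steam injected m3/d, reservoir quality index, oil produced m3/d).
history = [
    (300.0, 0.2, 90.0),
    (300.0, 0.6, 110.0),
    (600.0, 0.4, 200.0),
    (600.0, 0.8, 220.0),
]

# Learn only what the physics baseline fails to explain.
residuals = [oil - physics_baseline(steam) for steam, _, oil in history]
a, b = fit_residual([quality for _, quality, _ in history], residuals)

def hybrid_predict(steam_m3, quality):
    """Physics baseline plus the learned linear correction."""
    return physics_baseline(steam_m3) + a + b * quality

oil = hybrid_predict(450.0, 0.5)
print(round(oil, 1), round(450.0 / oil, 2))  # predicted oil rate and resulting steam-to-oil ratio
```

Because the data-driven part only models the residual, it needs less data and is less free to overfit than a purely statistical model of production.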

4 Big Data for Health and Safety

4.1 A top priority

Health and safety is top of mind for any oil and gas executive, and keeping operations safe is the number one priority. Data solutions are providing health and safety professionals with the unique ability to go through decades of incident reports and find root causes for similar incidents and mitigation strategies that have been effective in the past. Overall, they can help with today’s work on designing policies that prevent incidents and on designing safer operational practices.

4.2 Analysing historical safety data

Data analytics and deep learning can therefore enhance the implementation of process safety best practice and improvements to reduce the significant loss of containment risks. During safety investigations, what used to take several days of cross-referencing and searching can now be done in a matter of hours. In other words, a ‘super-computer’ can provide oil and gas personnel with valuable process safety insights at first hand, allowing them to learn from past experiences more efficiently and effectively.

Initially, IBM’s Watson system was primarily focused on cognitive capabilities, which is indeed the ability to comprehend, as we call it, unstructured data in documents or natural speech. But now Watson provides a comprehensive environment that combines machine learning tools and is extensively used to interact with programs and languages like TensorFlow, Python, or any other open-source tooling that most data scientists in the industry tend to use and are familiar with.

IBM’s Watson systems have ingested more than 30 years of Woodside’s health and safety data, with a reported 80 per cent reduction in the time taken to analyse safety incident information [7]. The HSEQ team receives nearly 10,000 safety alert cards a month at one of its gas plants; manually going through them would take hours, possibly days. What Big Data solutions allow HSEQ to do is analyse the cards to obtain feedback on the permit process, and then provide those insights to site controllers to drive action and improvement almost immediately.

4.3 Assessing community health risks

A recent study by the United States Environmental Protection Agency (US EPA) Integrated Risk Information System indicated an increased carcinogenic potential for community exposures to airborne ethylene oxide around facilities using the gas. The ethylene oxidation reaction is exothermic and, if this heat is not released in time, the reaction may be violent. A large amount of accumulated heat may result in threats to human health, loss of assets, or toxic discharges to the environment.

Figure 8. A decision tree algorithm application: Ethylene Oxide Safety and Environmental Protection. Source: [11]

4.4 Monitoring field workers

Other solutions already on the market are the “guardian angel devices”, which use sensors embedded in vests or helmets to monitor the vitals of field workers and alert them to unsafe locations or unsafe conditions, as well as to any physical distress the worker may be in. By doing so, they provide an appropriate and effective way of keeping oil and gas operations safe. Operators are also keen on putting forward their cleansed safety data as a seed to encourage community sharing.

5 Further Research Areas & Limitations

5.1 Modelling nanoflows

Solutions described so far represent work already completed, or work that has been done over recent years within the oil and gas industry. But researchers are currently focusing on digital rock, nanoscale flow analysis, and modelling using a combination of machine learning capabilities. At IBM, for example, experts are extracting geological rock characteristics to create an affordable thin layer that allows pore network analysis and several experiments monitoring nano-level flows, something that is poorly described in other primary physics models [4]. Eventually, this would lead to an effective simulation that helps determine the production capacity of a particular geological formation where those nanoflows occur. The hope is that this capability will bring much greater certainty about production in hard-to-estimate sites.

5.2 Smart filtration

There is also a so-called “self-adaptive water filtration” application of AI. For instance, within Water Planet’s IntelliFlux software, machine learning is used to analyse continuous data from flow and pressure sensors to determine optimal performance of filtration systems deployed in high-variability water environments for oil & gas [12]. AI-based software can therefore be used to control membrane filtration, ultrafiltration, nanofiltration, reverse osmosis membranes, and any other filtration media where periodic backwashing, cleaning or regeneration is required. After running a series of demonstrations using waters provided by a group of California oil producers to evaluate treating produced water for potential use in agricultural irrigation, the company is now focused on scaling up its business model. Artificial Intelligence-guided adaptive cleaning for membrane and filtration processes is expected to make treatment plants more efficient, reliable and economical.
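To show how flow and pressure readings can drive a cleaning decision, here is a minimal rule-based sketch that triggers a backwash when membrane permeability (flux divided by transmembrane pressure) drops below a fraction of its clean baseline. It is a fixed-threshold stand-in, not the machine learning used in IntelliFlux, whose internals the source does not describe; the readings, the 80 per cent threshold, and the function names are assumptions.

```python
def permeability(flux_lmh, tmp_bar):
    """Membrane permeability: flux (L/m2/h) divided by transmembrane pressure (bar)."""
    return flux_lmh / tmp_bar

def needs_backwash(readings, baseline, min_fraction=0.8):
    """True when the latest permeability falls below a fraction of the clean baseline."""
    flux, tmp = readings[-1]
    return permeability(flux, tmp) < min_fraction * baseline

# Hypothetical (flux, transmembrane pressure) readings as the membrane fouls.
clean_baseline = permeability(60.0, 0.5)  # 120 L/m2/h/bar when clean
stream = [(60.0, 0.5), (58.0, 0.6), (55.0, 0.7)]

for step in range(1, len(stream) + 1):
    print(step, needs_backwash(stream[:step], clean_baseline))
```

An adaptive system would replace the fixed `min_fraction` with a value learned from how the membrane responds to previous cleaning cycles under varying feed water.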

5.3 Virtual assistants

Extracting insight from across this environment is an on-demand job for Data Analytics systems. Engineers are creating personal virtual assistants using simple, natural language voice or text commands. In seconds, these tools can search millions of files for relevant information that might otherwise take days, weeks or even months to find.

Willow, for instance, is an Alexa-like virtual avatar that lets staff interact with all of Woodside’s enterprise systems using natural language voice commands. It searches all the company’s systems and data stores, drawing relevant answers from more than 60 years of history. People continue to play a vital role in Woodside’s cognitive and artificial intelligence work, as Willow learns with each interaction and piece of feedback from staff.
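Fast search over large document collections typically rests on some form of index built ahead of time. The sketch below shows a tiny inverted index with an all-terms-must-match query; it says nothing about how Willow itself is built, and the document ids, texts, and function names are hypothetical.

```python
import re
from collections import defaultdict

def tokenize(text):
    """Lower-case the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def build_index(documents):
    """Map each token to the set of document ids that contain it."""
    index = defaultdict(set)
    for doc_id, text in documents.items():
        for token in tokenize(text):
            index[token].add(doc_id)
    return index

def search(index, query):
    """Return the sorted ids of documents containing every query token."""
    tokens = tokenize(query)
    if not tokens:
        return []
    hits = set.intersection(*(index.get(t, set()) for t in tokens))
    return sorted(hits)

# Hypothetical report snippets.
DOCS = {
    "report-001": "Stuck pipe incident during drilling at 2500 m",
    "report-002": "Compressor maintenance schedule",
    "report-003": "Drilling fluid losses and stuck pipe mitigation",
}

index = build_index(DOCS)
print(search(index, "stuck pipe"))
```

Building the index costs one pass over the documents, after which each query only touches the entries for its own terms, which is why answers can come back in seconds. A natural language assistant adds speech and intent understanding on top of retrieval of this kind.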

5.4 Drones and remote sensing

Oil and gas distribution pipelines require monitoring for maintenance and safety to prevent equipment failure and accidents. Monitoring oil and gas pipeline networks requires periodic assessment of the functioning and physical state of the pipes to minimise the risk of leakage, spill and theft, as well as documenting actual incidents and the effects produced on the environment. Drones, also known as Unmanned Aerial Vehicles (UAVs), are emerging as an opportunity to supplement current monitoring systems.

Drone production is growing extremely fast. In ten years, industrial inspection in oil and gas, energy, infrastructure and transportation will be the dominating purpose of drones worldwide [13]. In the context of Big Data, the development of drones and associated operating systems provides a good example of the fusion of technologies: technological progress could lead to improved simulation models, new materials, and information and communication technologies including AI, miniaturised electronics, and wireless 5G communication. All of these will have straightforward implications for both current and future drone capabilities.

Drone tech and Big Data are fueling an array of enterprise initiatives. In general, drones are comparatively low-cost and easy to deploy in mapping and data-collection missions. They can be programmed, which also facilitates their use. The type of sensor carried by UAVs determines the sort of data acquired and the obtainable information. Through research and operational cases, they are demonstrating the capacity to support the inspection and monitoring of oil and gas pipelines. Prototype systems to monitor pipelines are reviewed in the literature, and some monitoring scenarios are proposed and illustrated [14], [15], [16].

Figure 9. Schematic illustration of monitoring scenarios. Source: https://link.springer.com/article/10.1007/s12517-017-2989-x/figures/2

5.5 Why data science might fail in oil and gas

Big Data on its own is not a silver bullet for oil and gas companies. Today, we are seeing a lot of analytics vendors running around selling predictive maintenance to big industrial companies, but what these companies seem to miss is real domain competence embedded in their teams. That means having someone who is used to working with these machines and who works at the company full-time. This is important for several reasons.

Firstly, to tell when a machine is operating as it should, and when it is not, is hard work that requires a share of an engineer’s time.

Secondly, the user has to know how the various sensors relate to each other to be good at predictive maintenance. The user has to be able to tell which sensor tells something about a mechanical issue and which sensor can say something about a performance issue, for instance. If the user does not know that, then the typical noise levels of these sensors will lead the data scientist to overfit their model. The last one is almost always a problem, and that is why the use of physics-based models is recommended to augment machine learning capabilities and deliver real value at scale.

6 Conclusion

Industry 4.0 reflects the next revolution of industrial innovation and now presents new opportunities for raising oilfield production performance to new standards of efficiency, control, and recovery.

As we have seen throughout this article, such digital innovations in data analytics are highly adaptable. The critical drivers for their adoption are the following:

- Data analysis: rapid analysis of massive volumes of divergent data

- Traceability: fact-based and traceable reasoning

- Expertise: insight and expertise retained within the company

- Decision-making: improved decision-making with greater certainty

- Innovation: democratised innovation by scaling knowledge

One of the most impactful among them is the fact that the knowledge of the company’s best practices and the expertise of its most senior and experienced people are captured and retained through Big Data systems in the corporation. This is expertise that never retires and never leaves. The more it is used, the better it gets; the more experts it interacts with and the more data it is fed, the higher the quality of the recommendations it provides, driving operations to new levels of performance. With that, it is probably one of the closest things to a sustained competitive advantage that technology can provide.

Experts and trend research suggest that those at the forefront of the oil and gas industries will be those able to generate energy at the lowest possible price. By digitising their upstream and downstream networks, companies are cutting costs and improving productivity in their supply chains. The advent of the “digital oil field” has helped produce cost-effective energy while addressing safety and environmental concerns. The essential elements that comprise an Oilfield 4.0 are advanced data analytics, the internet of things (IoT), cloud computing, artificial intelligence, deep learning, and edge connectivity. Oil & gas companies have seen a range of benefits from adopting Big Data and analytics. Some key benefits of Big Data analytics include being able to visualise a drilling map, driving the drill most effectively and efficiently, and assessing the safety and environmental risks to oil and gas workers and the surrounding community.

7 References

[1] https://www.geoinsights.com/what-is-big-data/

[2] Nguyen, T., Gosine, R. G., & Warrian, P. A Systematic Review of Big Data Analytics for Oil and Gas Industry 4.0. IEEE Access, 8, 2020, 61183-61201.

[3] BP Energy, “BP Energy Outlook 2019,” BP Publishers, 2019. [Online]. Available: https://www.bp.com/content/dam/bp/business-sites/en/global/corporate/pdfs/energy-economics/energy-outlook/bp-energy-outlook-2019.pdf

[4] https://www.ibm.com/industries/oil-gas/big-data-analytics

[5] https://www.hornetcorp.com/post/the-importance-of-big-data-analytics-for-the-oil-and-gas-industry

[7] https://www.woodside.com.au/innovation/data-science

[8] https://www.itnews.com.au/feature/the-mechanics-of-woodsides-watson-environment-440875

[10] Nduagu, E., Alpha Sow, Umeozor, E., & Millington, D. (2017). Economic potentials and efficiencies of oil sands operations: processes and technologies. Canadian Energy Research Institute.

[11] Jin, H., Lin, X., Cheng, X., Shi, X., Xiao, N., & Huang, Y. (Eds.). (2019). Big Data: 7th CCF Conference, BigData 2019, Wuhan, China, September 26–28, 2019, Proceedings (Vol. 1120). Springer Nature.

[13] Stankovic, M., Hasanbeigi, A., Neftenov, M. N., Ventures, T. I., Basani, M., Núñez, A., & Ortiz, R. Use of 4IR Technologies in Water and Sanitation in Latin America and the Caribbean. 2020. Available: https://publications.iadb.org/publications/english/document/Use-of-4IR-Technologies-in-Water-and-Sanitation-in-Latin-America-and-the-Caribbean.pdf

[14] Gao, J., Yan, Y., & Wang, C. Research on the application of UAV remote sensing in geologic hazards investigation for oil and gas pipelines. In ICPTT 2011: Sustainable Solutions For Water, Sewer, Gas, And Oil Pipelines (pp. 381-390).

[15] Mezghani, M. M., Fallatah, M. I., & AbuBshait, A. A. From drone-based remote sensing to digital outcrop modeling: Integrated workflow for quantitative outcrop interpretation. J. Remote Sens. GIS, 2018, 7, 1000237.

[16] Gómez, C., & Green, D. R. Small unmanned airborne systems to support oil and gas pipeline monitoring and mapping. Arabian Journal of Geosciences, 2017, 10(9), 202.
